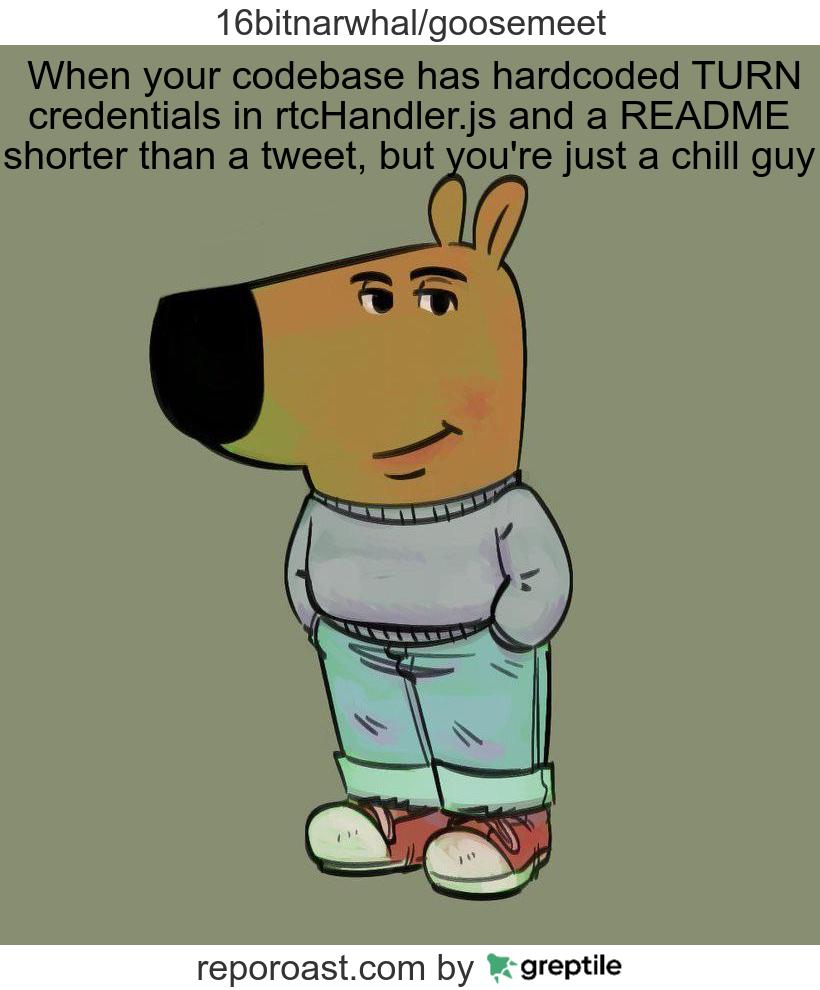

Hi! I'm Eric (@16BitNarwhal). I am an undergrad at the University of Waterloo and I recently spent a semester interning at Greptile. I built reporoast.com, a site that uses the Greptile API and large language models (LLMs) to generate memes that roast your GitHub repo.

When I started building this, I thought it'd be simple: use Greptile to analyze the codebase and maybe some type of image generation model to generate the meme.

It turned out to be far more complicated for a few reasons:

- It was difficult to find roast-worthy mistakes in the codebase.

- The LLM was simply not funny.

- The image generation models struggled with meme formats and text.

Here is an overview of what did and didn't work.

Generating repo-specific roasts

I started by directly querying Greptile's API to analyse a code repository and generate roasts for it.

Here was my initial request:

curl --request POST \
  --url https://api.greptile.com/v2/query \
  --header 'Authorization: Bearer <GREPTILE_API_KEY>' \
  --header 'Content-Type: application/json' \
  --header 'X-GitHub-Token: <GITHUB_TOKEN>' \
  --data '{
    "messages": [
      {
        "content": "Roast this repository in a light-hearted way"
      }
    ],
    "repositories": [
      {
        "remote": "github",
        "branch": "main",
        "repository": "16BitNarwhal/micppgrad"
      }
    ]
  }'

The response was really, really long and even ended with a disclaimer. Yeah, I don't think this would work in a meme:

{"message":"Oh boy, let me roast this repository! 🔥\n\nAh yes, automatic differentiation in C++ - because

apparently regular differentiation wasn't complicated enough! This is what happens when math nerds decide to make

calculus even more intimidating by wrapping it in layers of C++ templates. It's like they looked at derivatives and

thought \"You know what this needs? More pointer arithmetic!\"\n\nNeural networks and automatic differentiation...

a match made in complexity heaven! It's the kind of project where even the debugger needs therapy sessions. I bet

the error messages are longer than a CVS receipt.\n\nThe fact that it has a README is like putting a \"Warning:

Hot\" label on the sun - technically helpful, but we all know we're in for pain anyway. It's probably full of

mathematical equations that look like someone fell asleep on their keyboard while holding down the Shift key

\n\nThis is the kind of repository where \"undefined behavior\" isn't a bug - it's a feature! Because who needs

predictable results when you can have the excitement of wondering whether your gradients are actually calculating

derivatives or accidentally solving world peace?\n\nBut hey, at least it's not written in Brainfuck... though

sometimes C++ template metaprogramming makes that look like a viable alternative! 😂\n\nRemember folks: If you

understand this repository on the first try, you're either lying or you're the person who wrote it (and even then,

I have my doubts)! \n\n*Disclaimer: This roast was written with love and respect for the brave souls who venture

into the depths of automatic differentiation. May your stack traces be short and your compile times reasonable!*

😉","sources":[]}

To keep it short and remove any extra details that wouldn't be directly part of the meme, I added this to the prompt:

Do not use emojis or any other special characters and do not have any introductory or concluding statements or

conversational extras.

Format your response as a single concise roast for a meme.

This seems a bit better. It's short enough that people actually have the attention span to read it:

Ah yes, another repository where developers spent more time writing documentation about how to write

documentation than actually writing code. The ultimate meta-procrastination project.

But the output feels a bit generic. You could attach it to any repo and it would still work. I wanted something specific that directly references the repository. The best way I could think of to get that was to beg Claude to be specific:

Please include specific details like:

- Real file names and paths (e.g. "src/utils/hacky-workaround.js")

- Specific error patterns or anti-patterns found in the code

- Real issue numbers or PR comments

- Concrete examples of problematic implementations

Focus on concrete, specific issues rather than general observations.

Now I got mixed results. Sometimes it would work and sometimes it would fail to find relevant information in the codebase:

'The tests in test_value.cpp are more like a wish list of what should work rather than actual tests.'

and

"I apologize, but I don't see any actual code files, paths, or repository content provided in the context..."

I think this sometimes failed because I was asking Greptile to do too much. Greptile "specializes in" analysing codebases, but I was also making it generate roasts, which probably interfered with retrieving information from the codebase.

To fix this, I split generating roasts into 2 tasks - analysis and roast - so that Greptile can focus solely on codebase analysis.

Separating issue discovery and roast generation

For the analysis, I prompted Greptile's API to identify weaknesses of the repository. For example:

Look at many source code files and list 5 of the most egregious issues about the code itself.

Directly reference the code and files if possible.

Then list 5 issues about the repo itself. Do not list generic issues, try to list issues that

are highly specific to this repo.
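As a rough sketch, here is how that analysis prompt could be packaged into a request. The URL, headers, and body fields mirror the curl request shown earlier in this post; the helper name `buildAnalysisRequest` and the constant `ANALYSIS_PROMPT` are made up for illustration and are not from the actual project.

```javascript
// Sketch only: the helper and constant names are hypothetical. The URL,
// headers, and body shape are copied from the curl request earlier in the post.
const ANALYSIS_PROMPT = [
  "Look at many source code files and list 5 of the most egregious issues about the code itself.",
  "Directly reference the code and files if possible.",
  "Then list 5 issues about the repo itself. Do not list generic issues, try to list issues that",
  "are highly specific to this repo.",
].join(" ");

// Builds the arguments for fetch() without sending anything.
function buildAnalysisRequest(repository, branch, greptileKey, githubToken) {
  return {
    url: "https://api.greptile.com/v2/query",
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${greptileKey}`,
        "Content-Type": "application/json",
        "X-GitHub-Token": githubToken,
      },
      body: JSON.stringify({
        messages: [{ content: ANALYSIS_PROMPT }],
        repositories: [{ remote: "github", branch, repository }],
      }),
    },
  };
}

// Usage (needs real credentials, so it is left commented out):
// const { url, options } = buildAnalysisRequest("16BitNarwhal/micppgrad", "main", KEY, TOKEN);
// const { message } = await (await fetch(url, options)).json();
```

Keeping the request builder separate from the network call makes it easy to swap in a different prompt for each stage.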

The response generally looked something like this:

Code Issues:

- In src/value.cpp, the backprop() implementation uses a recursive lambda function for topological sort which could cause stack overflow with deep computation graphs

- Neural network weight initialization in src/neural_network.cpp uses uniform random distribution [-1,1] rather than proper Xavier/He initialization, leading to potential gradient vanishing/exploding

- The Value class in src/value.cpp stores gradients as raw doubles without handling potential numerical overflow/underflow issues during backpropagation

- In src/main.cpp, the training loop lacks any early stopping criteria or learning rate scheduling, making training potentially unstable

- The MultiLayerPerceptron class in src/neural_network.cpp lacks any regularization capabilities like dropout or weight decay

Repository Issues:

- No continuous integration setup or automated testing workflow despite having a test suite in tests/test_value.cpp

- Documentation in README.md only shows usage examples but lacks mathematical explanations of the automatic differentiation implementation

- Visualization script graph.py requires manual installation of Python dependencies without proper requirements.txt

- run.sh build script lacks proper error handling for CMake configuration failures

- No versioning or release tags despite having significant functionality changes across commits

Although not in a "roast-y" format yet, this produced very specific issues drawn directly from the repo itself.

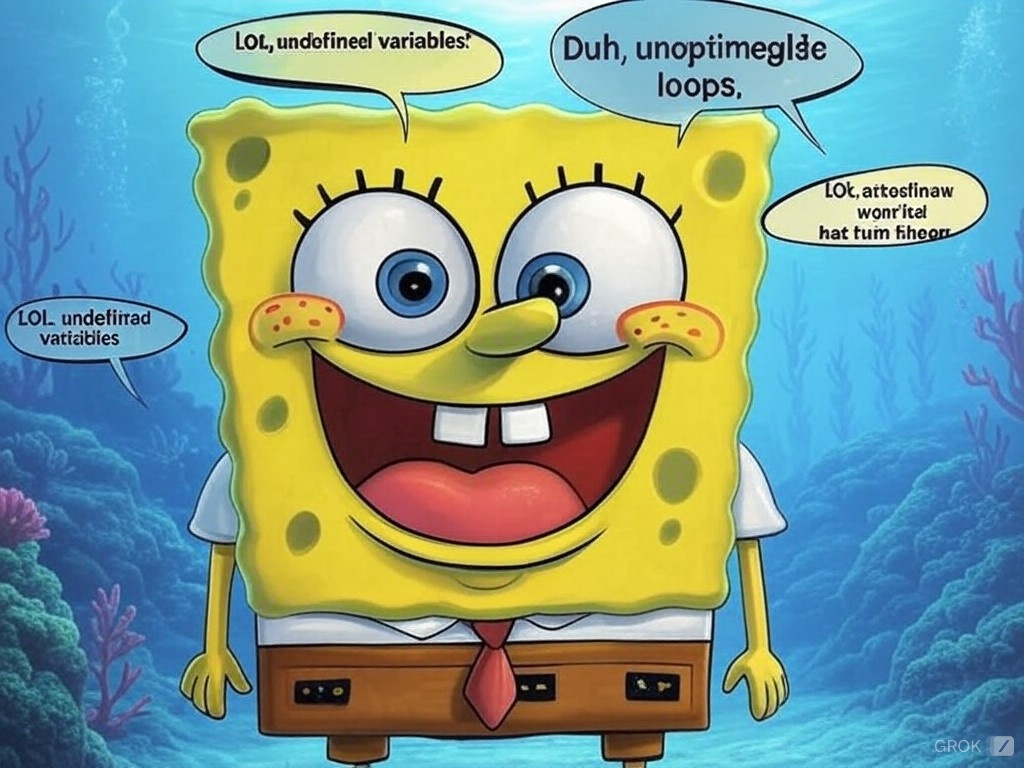

For the roast generation part, I wanted to eventually create meme images, so I prompted an LLM (Claude in this case) to write the roast as text bubbles, passing in the previous analysis results as input.

Here's the prompt I used:

Write a single short and concise meme using issues with this repository.

Repository Context: ${analysisResults}

Include specific details like:

- Real file names and paths (e.g. "src/utils/hacky-workaround.js")

- Specific error patterns or anti-patterns found in the code

- Real issue numbers or PR comments

- Concrete examples of problematic implementations

Maintain technical accuracy while being humorous

Keep within meme format style (brief, punchy, casual tone)

No introduction, explanation, or additional commentary needed.

Output:

Me: "Uniform random [-1,1] in neural_network.cpp"

My neural net: *exploding gradients*

Time to generate an image.

Generating memes with image generation models

My first instinct was to use image generation models to generate memes.

Yeah, maybe this isn't the best. Image generation models tend to struggle with text, which is necessary for memes. Another potential issue was just the cost of image generation.

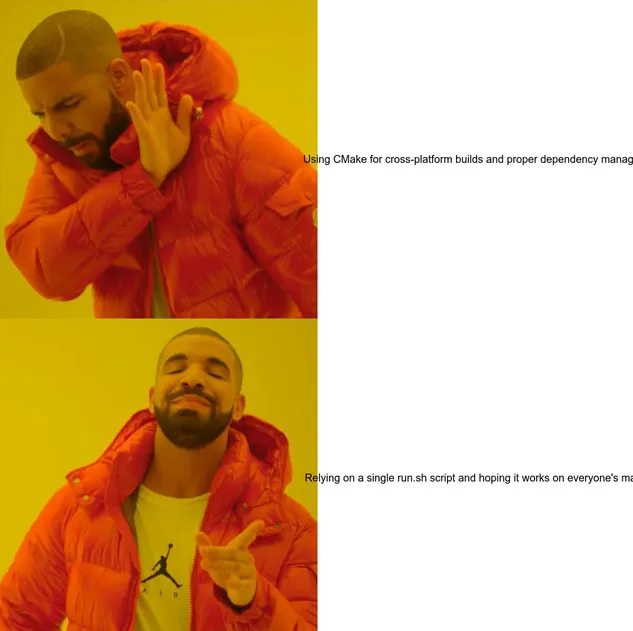

So I tried using a simpler solution: hardcoded meme templates.

I downloaded popular meme images with the text left blank. Then I hardcoded the rectangular regions in each image where the text should go.

For example:

bubbleRects: [
  createRect(648, 1176, 24, 576), // upper text bubble
  createRect(648, 1176, 624, 1176) // lower text bubble
]
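The post doesn't show `createRect` itself, so here is one plausible reading, not the real implementation. It assumes the arguments are `(left, right, top, bottom)` in pixels, which is a guess based on the two calls sharing their first two values. The template object, its `image` field, and the file name are also invented for illustration.

```javascript
// Hypothetical helper: the argument order (left, right, top, bottom) is an
// assumption, not something the original project confirms.
function createRect(left, right, top, bottom) {
  return { x: left, y: top, width: right - left, height: bottom - top };
}

// A template entry pairing an image with its text regions. The "image" field
// and file name are illustrative only.
const drakeTemplate = {
  image: "drake.png",
  bubbleRects: [
    createRect(648, 1176, 24, 576), // upper text bubble
    createRect(648, 1176, 624, 1176), // lower text bubble
  ],
};
```

Under this reading, both bubbles come out 528 px wide and 552 px tall, stacked in the right-hand column of the image.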

Now for the text that actually goes into these images, I had to revisit the LLM that generates roasts. For each image template, I had a different "meme image description" that was added to the prompt. For example:

The image has 2 rows with Drake rejecting the first text bubble and Drake happily accepting the second text bubble. Format your response as a JSON array of strings. Make sure the array has length 2, representing the number of text bubbles.
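One way to assemble the full prompt per template is sketched below. The function name `buildMemePrompt` and the `description` and `bubbleRects` fields on the template are assumptions for illustration. The prompt sentences come from the prompts quoted in this post, with the array length filled in from the number of text bubbles.

```javascript
// Hypothetical prompt builder: combines the analysis output with a
// template-specific description and the JSON formatting instruction.
function buildMemePrompt(analysisResults, template) {
  const bubbleCount = template.bubbleRects.length;
  return [
    "Write a single short and concise meme using issues with this repository.",
    `Repository Context: ${analysisResults}`,
    template.description,
    "Format your response as a JSON array of strings. " +
      `Make sure the array has length ${bubbleCount}, representing the number of text bubbles.`,
  ].join("\n\n");
}
```

Deriving the length from the template means the instruction and the number of rectangles can't drift apart when a new template is added.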

I parsed this list and used node-canvas to insert text directly into these template images.

Another problem: the LLM doesn't always give me a list, and when it does, the list doesn't always have the right length! Sometimes I would get something that can't be parsed, like "Here is your list: [A, B]", or a list of the wrong size, like "[A, B, C, D]". So I added a retry policy: each time it failed, I reprompted the LLM with its previous response plus "This isn't a JSON formatted array. Try again" or "The array must be size 2. Try again".
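A minimal sketch of that retry loop might look like this. `askLlm` is a hypothetical async function that takes a list of chat messages and returns the model's reply text. The `role`/`content` message shape and the limit of three attempts are assumptions too, not details from the real project.

```javascript
// Checks one reply. Returns { bubbles } on success, or { feedback } holding
// the correction message to send back to the model.
function checkReply(reply, expectedLength) {
  let parsed = null;
  try {
    parsed = JSON.parse(reply);
  } catch {
    // Not valid JSON at all, e.g. 'Here is your list: [A, B]'.
  }
  if (!Array.isArray(parsed) || !parsed.every((s) => typeof s === "string")) {
    return { feedback: "This isn't a JSON formatted array. Try again" };
  }
  if (parsed.length !== expectedLength) {
    return { feedback: `The array must be size ${expectedLength}. Try again` };
  }
  return { bubbles: parsed };
}

// askLlm is hypothetical: async (messages) => reply text.
async function generateBubbles(askLlm, prompt, expectedLength, maxAttempts = 3) {
  const messages = [{ role: "user", content: prompt }];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const reply = await askLlm(messages);
    const result = checkReply(reply, expectedLength);
    if (result.bubbles) return result.bubbles;
    // Feed the failed reply and the correction back in, then try again.
    messages.push({ role: "assistant", content: reply });
    messages.push({ role: "user", content: result.feedback });
  }
  throw new Error(`No valid bubble list after ${maxAttempts} attempts`);
}
```

Capping the number of attempts keeps a stubborn model from looping forever; the caller can catch the error and fall back to a different template or a fresh prompt.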

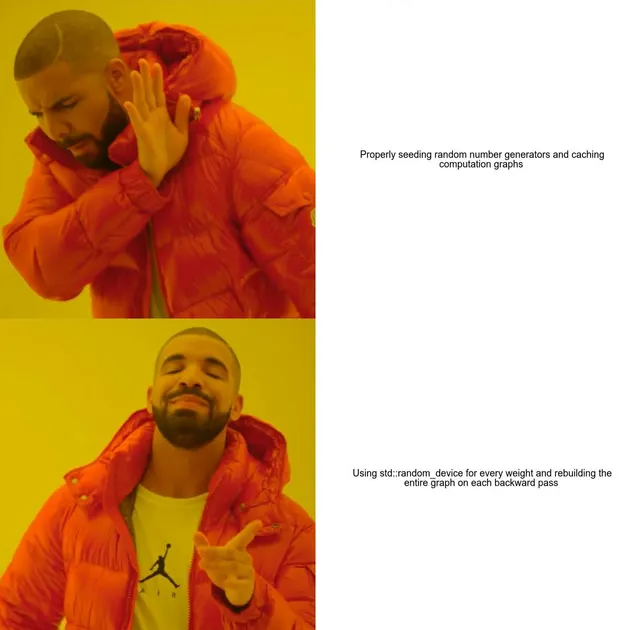

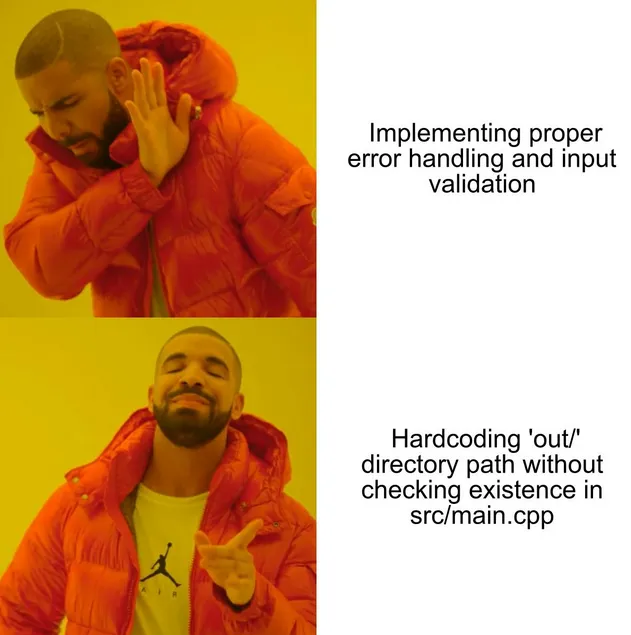

Anyway, here's the output:

This doesn't look good. At all. I mean the text is there at least. I should probably add word wrap:

To make the text as large as possible, I brute-force font sizes, increasing the size until the text is too large and overflows.
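The word wrap and font-size search can be sketched roughly as follows. This is not the site's actual code: the function names, the size range, and the 1.2 line-height factor are all assumptions. Text measurement is passed in as a `measure(text, fontSize)` function so the logic stands on its own without a canvas.

```javascript
// Greedy word wrap: start a new line whenever adding the next word would
// push the current line past maxWidth.
function wrapText(text, fontSize, maxWidth, measure) {
  const lines = [];
  let line = "";
  for (const word of text.split(/\s+/).filter(Boolean)) {
    const candidate = line ? `${line} ${word}` : word;
    if (line && measure(candidate, fontSize) > maxWidth) {
      lines.push(line);
      line = word;
    } else {
      line = candidate;
    }
  }
  if (line) lines.push(line);
  return lines;
}

// Brute force: try sizes from small to large and keep the last one whose
// wrapped text still fits inside the rectangle.
function fitText(text, rect, measure, { minSize = 8, maxSize = 200, lineHeight = 1.2 } = {}) {
  let best = null;
  for (let size = minSize; size <= maxSize; size++) {
    const lines = wrapText(text, size, rect.width, measure);
    const tooWide = lines.some((l) => measure(l, size) > rect.width);
    const tooTall = lines.length * size * lineHeight > rect.height;
    if (tooWide || tooTall) break;
    best = { fontSize: size, lines };
  }
  return best; // null if the text does not fit even at minSize
}

// With node-canvas, measure could be wired up like this (the font name is
// just an example):
// const { createCanvas } = require("canvas");
// const ctx = createCanvas(1, 1).getContext("2d");
// const measure = (text, size) => {
//   ctx.font = `${size}px Impact`;
//   return ctx.measureText(text).width;
// };
```

A binary search over font sizes would need fewer measurements, but with at most a couple hundred candidate sizes per bubble the linear scan is cheap and easier to reason about.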

This is a bit on the hackier side, but it works and keeps the text consistent:

Remarks

While there were a few bumps and hacky implementations along the way, I had a ton of fun building an app designed solely to roast projects.

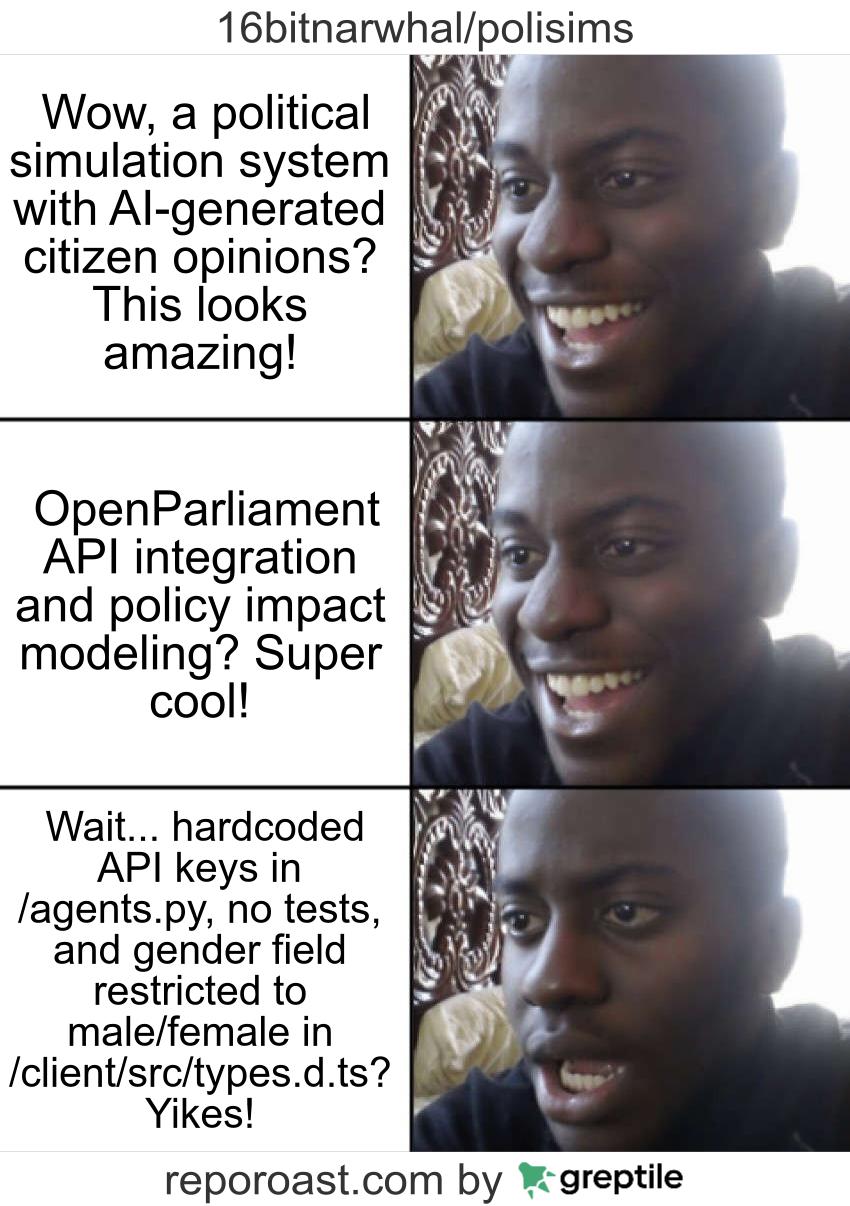

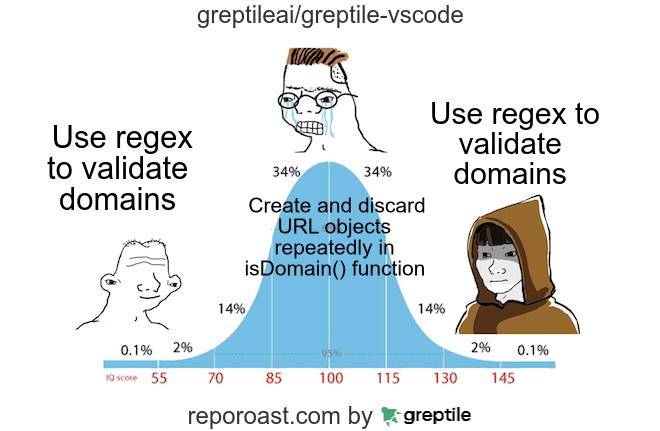

Here are some finished outputs:

You can make your own memes at reporoast.com. Let me know what you think!