I’m Vaishant, one of the co-founders of Greptile - an AI that does a first-pass review of pull requests with complete context of the codebase. Teams use it because it helps them review PRs faster and find more bugs and antipatterns. As a result, we spend a lot of time studying PRs. We study how long they tend to stay open, what affects how long they stay open, the size of a typical PR, etc.

Recently, I decided to study ~24,300 merged PRs from ~100 engineering orgs of different sizes, ranging from 10 to 500 engineers. I picked a fairly balanced sample so orgs of different sizes were equally represented. I observed a handful of interesting patterns and have documented them here, along with some possible explanations.

Some notes about this data sample:

| Metric | Mean | 25th %ile | 50th %ile | 75th %ile | Max |
|---|---|---|---|---|---|
| Changed Files | 10.3 | 1 | 3 | 9 | 631 |
| Additions | 368 | 6 | 38 | 193 | 99.1k |
| Deletions | 213 | 2 | 10 | 55 | 76.1k |
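
For anyone who wants to build the same kind of summary for their own repos, here is a minimal sketch using only Python's standard library. The `summarize` helper and the sample numbers are made up for illustration; they are not from the dataset above, and this is not necessarily how the table was produced.

```python
import statistics

def summarize(values):
    """Return the mean, quartiles, and max for a list of per-PR counts."""
    # "inclusive" treats the data as the full population rather than a sample.
    p25, p50, p75 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "mean": statistics.mean(values),
        "p25": p25,
        "p50": p50,
        "p75": p75,
        "max": max(values),
    }

# Hypothetical changed-file counts for nine PRs (illustrative only).
changed_files = [1, 1, 2, 3, 3, 5, 9, 12, 40]
summary = summarize(changed_files)
print(summary)
```

Reporting percentiles next to the mean matters here because a few enormous PRs can pull the mean well above the median.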

How long do PRs tend to stay open?

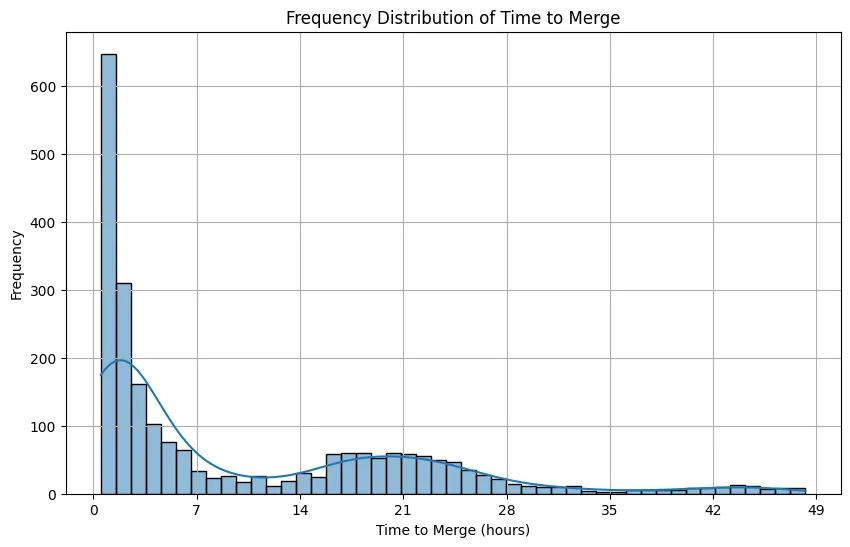

The mean time to merge across the sampled PRs was 10.10 hours. The median time to merge was 3.84 hours.

Many PRs get merged within an hour, after which the frequency goes down with every hour. There is a bump at the 21-hour mark, probably from engineers merging and clearing out the previous day’s review backlog. Virtually no merged PRs had stayed open for more than 48 hours.
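
As a rough sketch of the measurement itself: time to merge is the gap between when a PR was opened and when it was merged. The field names `created_at` and `merged_at` and the three sample PRs below are assumptions for illustration, not rows from the dataset.

```python
from datetime import datetime
from statistics import mean, median

def hours_to_merge(created_at, merged_at):
    """Hours between two ISO 8601 timestamps (with explicit UTC offsets)."""
    created = datetime.fromisoformat(created_at)
    merged = datetime.fromisoformat(merged_at)
    return (merged - created).total_seconds() / 3600

# Hypothetical PRs: a quick merge, a same-day merge, and a next-morning merge.
prs = [
    {"created_at": "2024-03-04T09:00:00+00:00", "merged_at": "2024-03-04T09:30:00+00:00"},
    {"created_at": "2024-03-04T10:00:00+00:00", "merged_at": "2024-03-04T14:00:00+00:00"},
    {"created_at": "2024-03-04T13:00:00+00:00", "merged_at": "2024-03-05T10:00:00+00:00"},
]

durations = [hours_to_merge(p["created_at"], p["merged_at"]) for p in prs]
mean_hours = mean(durations)
median_hours = median(durations)
print(mean_hours, median_hours)
```

Even in this tiny example, the one next-morning merge drags the mean far above the median, which is the same shape as the gap between the two numbers above.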

Do Larger, More Complex PRs Get Merged Faster?

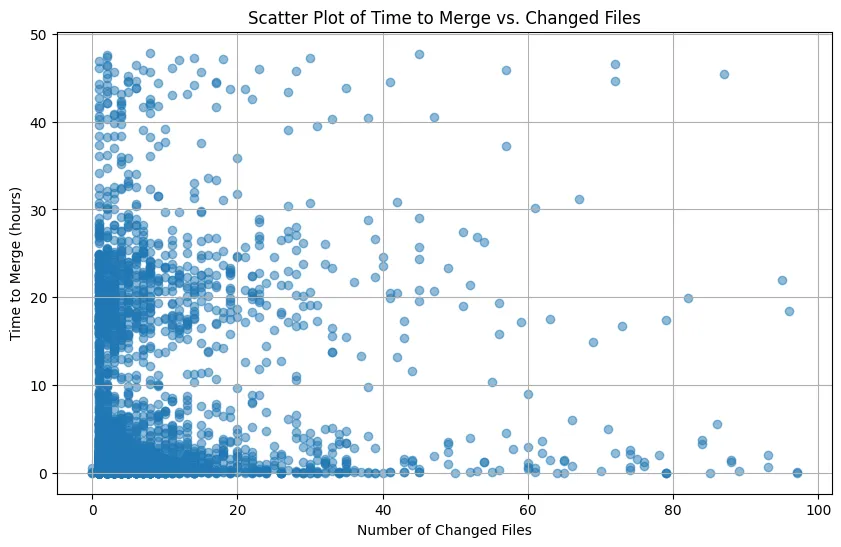

It’s important to start by defining what we mean by large PRs. One way to measure it is by the number of files changed.

This is not a terrible proxy for size but might actually be a better approximation of PR complexity. Generally speaking, a change that touches more files is a more “complex change”.

This scatter plot seems to indicate there is a generally inverse correlation here. The more files changed in a PR, the more likely it is that it gets merged relatively quickly.

Some theories:

Decay speed: PRs that change more files are likely to decay more quickly, as other commits create merge conflicts. Therefore, there is greater urgency in merging a larger PR.

Blocking PRs: related - larger PRs are more likely to block other engineers, so reviewing them might be a higher priority.

Lazy review: It’s possible that we tend to get lazy while reviewing larger PRs, and so while smaller PRs get many comments, larger ones don’t face that level of scrutiny, getting merged faster.

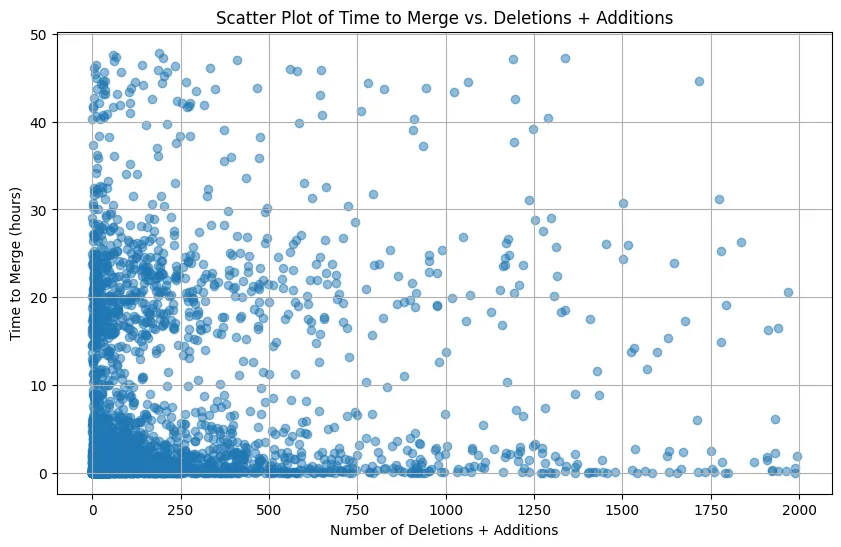

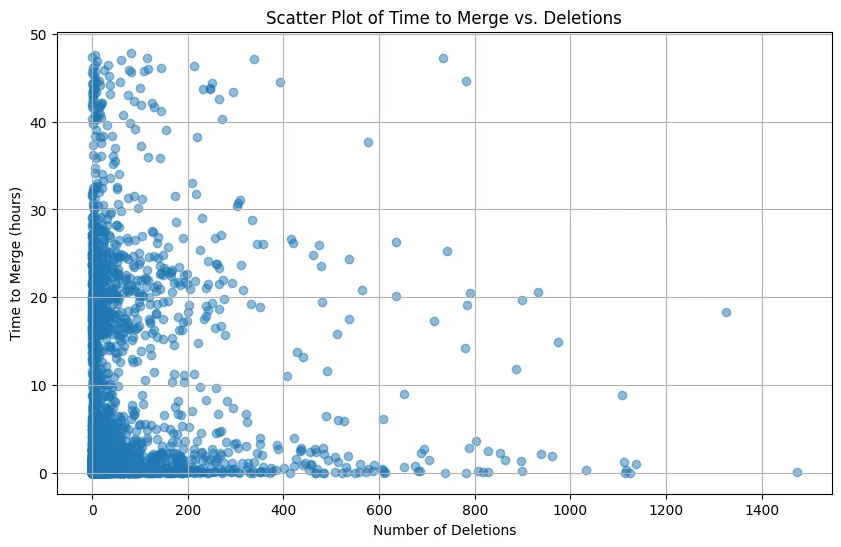

The sum of additions and deletions is probably a better measure of a PR’s “size”. We see a similar trend here - generally an inverse relationship between the total number of changes and the time to merge.
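
One way to put a number on an inverse relationship like this is a rank correlation, which is less sensitive to a few enormous PRs than a plain linear correlation. To be clear, the observations in this post come from reading the scatter plots; the Spearman sketch below is a swapped-in technique, and the sample values are invented for illustration.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks

def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return cov / (spread_x * spread_y)

def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))

# Hypothetical PRs: total lines changed and hours to merge (illustrative only).
lines_changed = [12, 48, 230, 900, 4000]
hours_open = [30.0, 9.0, 6.5, 3.0, 1.0]
rho = spearman(lines_changed, hours_open)
print(rho)
```

A value near -1 means larger PRs consistently merged faster in the sample; a value near 0 means no consistent ordering.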

A lot of the same reasons as above likely apply here too. PRs with more changes make reviewers lazy, or they are blocking changes and are reviewed with urgency.

Something that explains the outliers (enormous PRs that were merged super fast) could be that those are programmatic PRs, for example running prettier --write or something similar recursively across an entire directory.

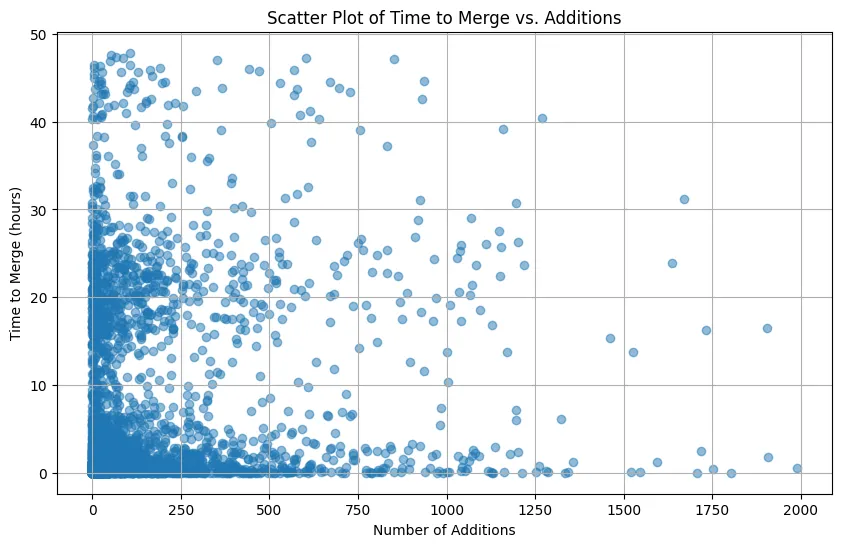

The results are not too different when you isolate additions or deletions either.

One interesting observation is the bump in the middle of the y-axis in each of these graphs. We know there is a bump in PR merge times around that mark (21 hours after open). It’s interesting to see that PRs that were pushed to the next day were usually larger on average.

While this may be an over-extrapolation, it’s possible that the pattern is something like this:

Merged within 2 hours: Large critical PRs, small hotfixes

Merged in 3-8 hours: Medium-sized PRs

Merged the next day: Large PRs that had some back and forth before merge. Comments from the first review were likely resolved later that day, final review and merge happened next morning.

Merged >24 hours later: Not many large PRs here, just small-medium-sized, non-blocking, non-critical PRs.
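
To check a pattern like this against your own data, one option is to bucket PRs by time to merge and compare the median size in each bucket. The cut-offs below are assumptions, since the buckets above leave gaps (between 2 and 3 hours, and between 8 hours and "the next day"), and the sample PRs are made up.

```python
from collections import defaultdict
from statistics import median

def merge_bucket(hours):
    """Assign a PR to a merge-time bucket (boundaries are assumed, not measured)."""
    if hours <= 2:
        return "within 2 hours"
    if hours <= 8:
        return "3-8 hours"
    if hours <= 24:
        return "next day"
    return "more than 24 hours"

def median_size_by_bucket(prs):
    """prs is a list of (hours_to_merge, lines_changed) pairs."""
    sizes = defaultdict(list)
    for hours, lines_changed in prs:
        sizes[merge_bucket(hours)].append(lines_changed)
    return {bucket: median(values) for bucket, values in sizes.items()}

# Hypothetical (hours to merge, lines changed) pairs, illustrative only.
sample_prs = [(1.0, 3000), (0.5, 10), (5.0, 150), (21.0, 1200), (22.0, 800), (30.0, 60)]
medians = median_size_by_bucket(sample_prs)
print(medians)
```

Note that a median would hide the "large critical PRs plus small hotfixes" mix in the first bucket, so it is worth looking at the spread within each bucket as well.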

The classic programmer meme is that small changes get many comments and large changes get very few. Turns out, this is at least somewhat grounded in reality.

Do Smaller Teams Ship More/Bigger PRs?

There is an old truism that says that small teams of software developers ship faster than large ones. This is the premise behind small companies outcompeting large ones, and large companies organizing their software orgs into small, decoupled teams of developers.

From our data, it wasn’t trivial to infer which developers work together on one cohesive team, so I had to use some imperfect analysis. Generally speaking, the set of developers contributing more than once a week to a particular repo can probably be considered members of the same team. It is likely their work is not particularly decoupled. The size of a team, therefore, is the number of weekly active PR authors on that team’s repo. The exception is monorepos, since many decoupled teams might contribute to them. I decided to exclude monorepos from the analysis entirely.
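
Here is a minimal sketch of that team-size proxy. It makes a few assumptions that go beyond the description above: it groups by ISO calendar week, counts any author with at least one merged PR that week as active, and averages over the weeks in which the repo had activity. The field names and sample PRs are hypothetical.

```python
from collections import defaultdict
from datetime import date

def weekly_active_authors(prs):
    """Map (repo, ISO year, ISO week) to the set of PR authors active that week."""
    authors = defaultdict(set)
    for pr in prs:
        iso = pr["merged_on"].isocalendar()
        authors[(pr["repo"], iso[0], iso[1])].add(pr["author"])
    return authors

def team_size(prs, repo):
    """Average number of distinct PR authors per active week for one repo."""
    weekly_counts = [
        len(names)
        for (name, _year, _week), names in weekly_active_authors(prs).items()
        if name == repo
    ]
    return sum(weekly_counts) / len(weekly_counts) if weekly_counts else 0.0

# Hypothetical merged PRs across two repos and two consecutive weeks.
prs = [
    {"repo": "api", "author": "ana", "merged_on": date(2024, 3, 4)},
    {"repo": "api", "author": "ben", "merged_on": date(2024, 3, 6)},
    {"repo": "api", "author": "ana", "merged_on": date(2024, 3, 7)},
    {"repo": "api", "author": "ana", "merged_on": date(2024, 3, 12)},
    {"repo": "api", "author": "cy", "merged_on": date(2024, 3, 13)},
    {"repo": "api", "author": "ben", "merged_on": date(2024, 3, 14)},
    {"repo": "api", "author": "dee", "merged_on": date(2024, 3, 15)},
    {"repo": "web", "author": "eli", "merged_on": date(2024, 3, 5)},
]
print(team_size(prs, "api"), team_size(prs, "web"))
```

Monorepos would need to be filtered out before this step, since the proxy breaks down when several decoupled teams share one repo.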

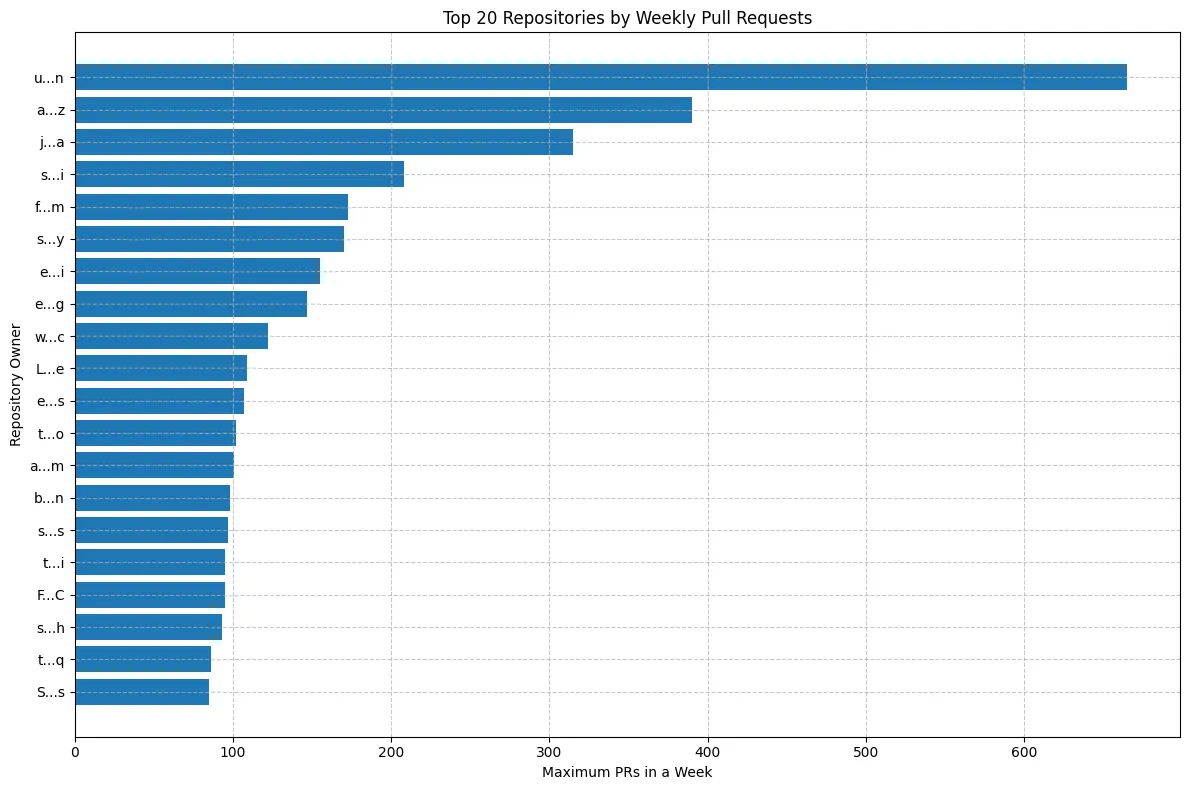

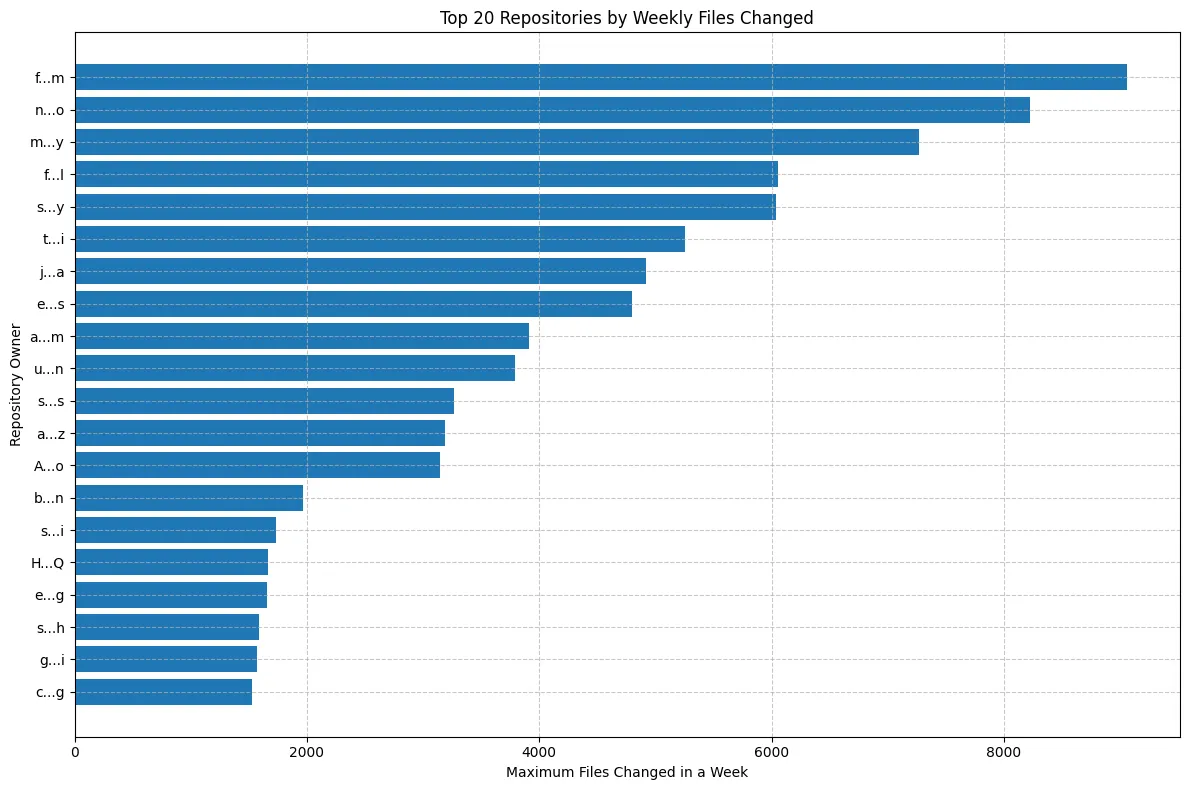

Some Teams Ship Much More Than Others

First, I wanted to see what variations exist in general when it comes to PR activity across different companies. The repo names are hidden for privacy.

There seems to be some type of power law, where certain companies make many more PRs than others. This power law does get weaker when we measure files changed instead of the number of PRs opened.

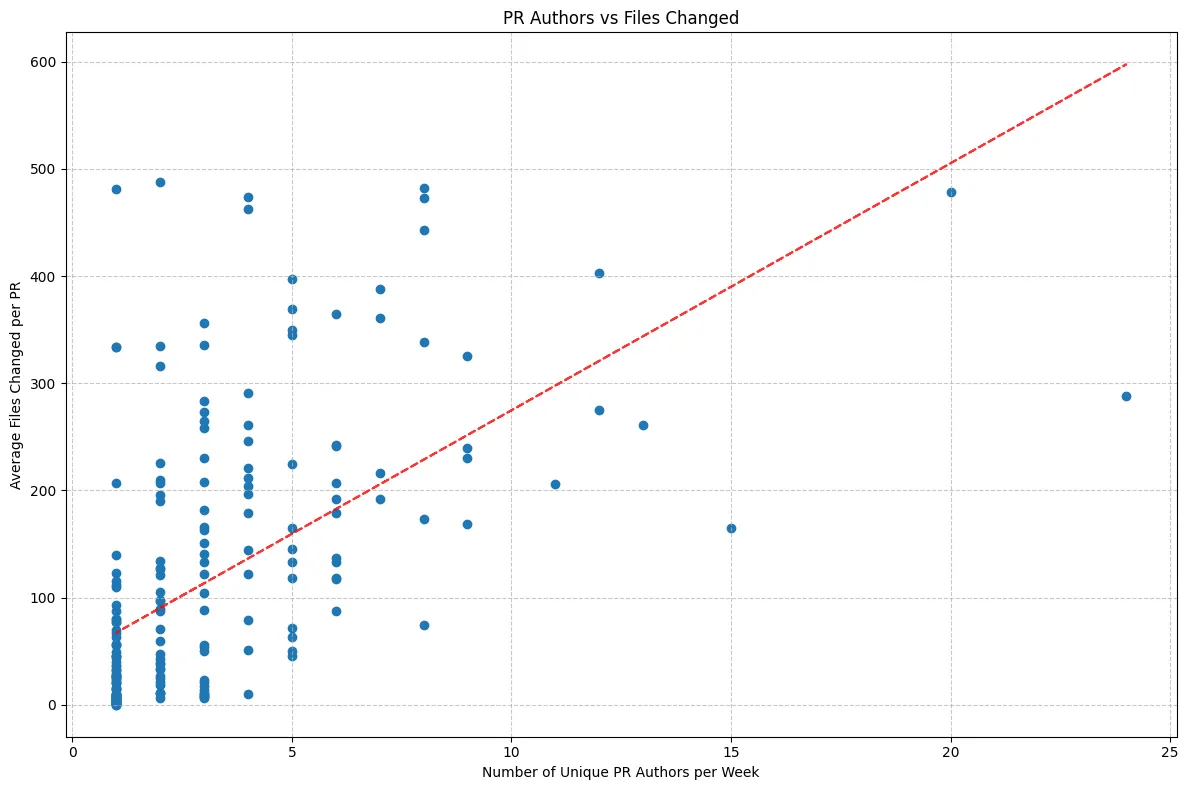

Now to see how team size affects all of this, I wanted to see how the quantity of code changes varied with the number of weekly unique authors contributing to a certain repo.

Bigger Teams Write Bigger PRs

First, I wanted to see how weekly unique authors correlated with weekly files changed across PRs. To my surprise, larger teams wrote larger PRs.

I would have guessed the opposite, since large teams tend to be more mature, and mature teams are taught to write smaller, more frequent PRs.

The likely reason for this observation is that larger teams are tending to larger repos, so even simple, atomic changes might touch many files.

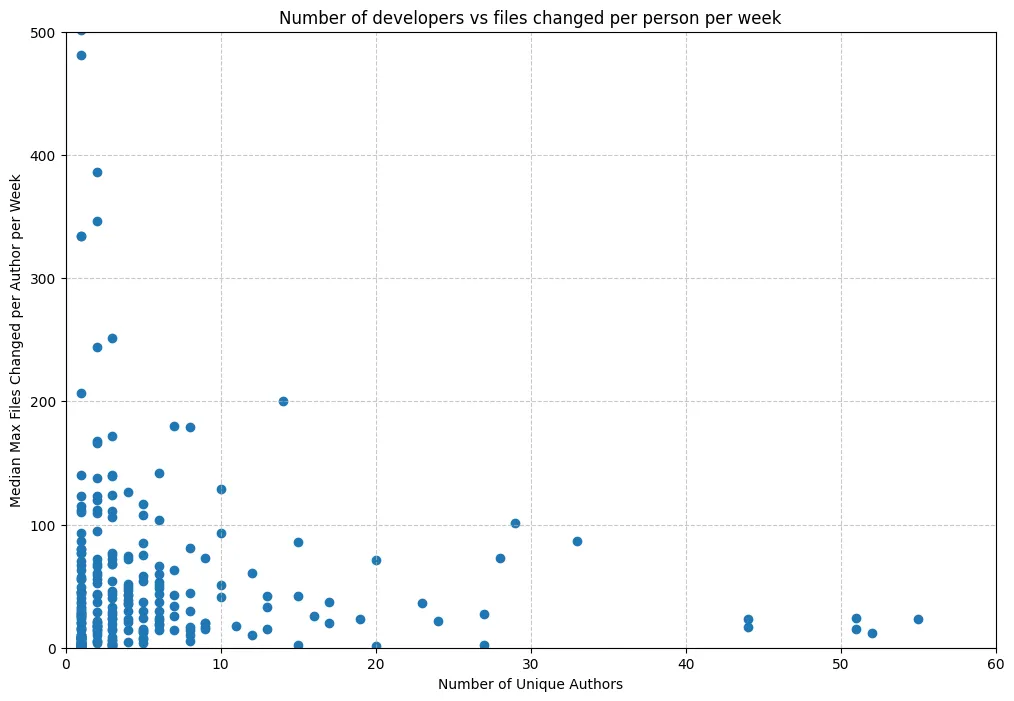

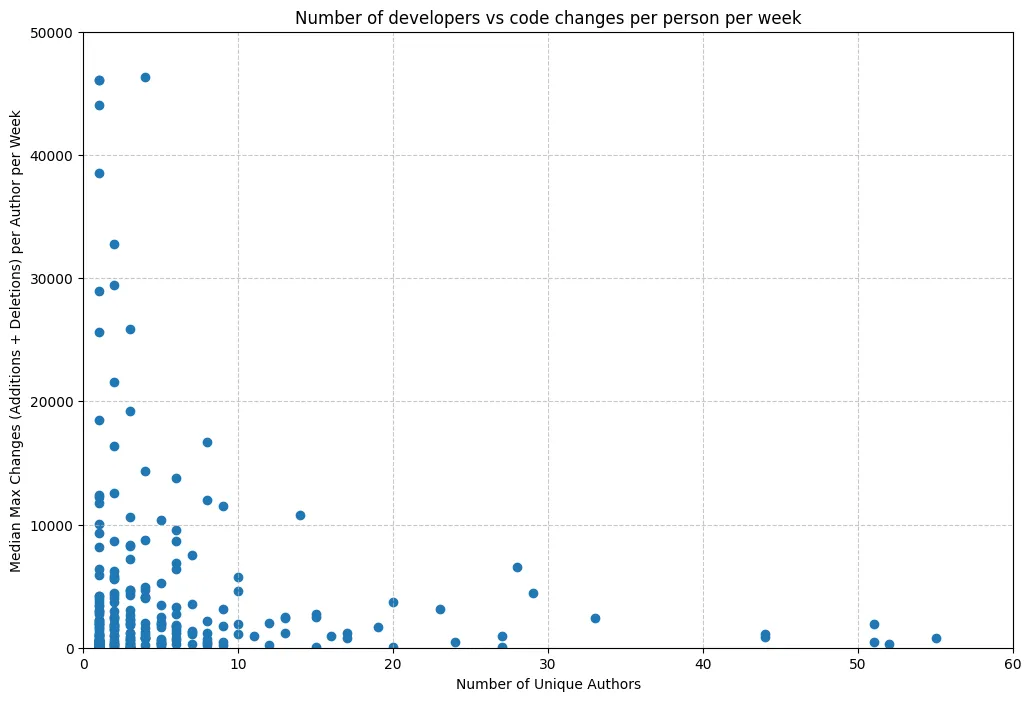

Next, I plotted weekly unique authors against files changed and total additions + deletions per author per week. Generally speaking, both were inversely related to the number of unique authors on the repo.

There does seem to be some inverse variation here. We can probably ignore the team of one on the left, since those are likely authors that can approve and merge their own PRs, but from there on, the larger the team gets, the fewer changes per author they make every week.
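
The per-author comparison can be sketched as follows: divide each repo-week's total changes by the number of unique authors that week, then average by team size. The row format and the numbers are assumptions for illustration, not figures from the study.

```python
from collections import defaultdict
from statistics import mean

def changes_per_author_by_team_size(weekly_rows):
    """Average lines changed per author per week, grouped by weekly unique authors."""
    grouped = defaultdict(list)
    for row in weekly_rows:
        authors = row["unique_authors"]
        if authors == 0:
            continue  # skip weeks with no activity to avoid dividing by zero
        grouped[authors].append(row["lines_changed"] / authors)
    return {size: mean(values) for size, values in sorted(grouped.items())}

# Hypothetical repo-weeks: unique authors and total additions + deletions.
weekly_rows = [
    {"unique_authors": 2, "lines_changed": 1200},
    {"unique_authors": 2, "lines_changed": 800},
    {"unique_authors": 5, "lines_changed": 1500},
    {"unique_authors": 10, "lines_changed": 2000},
]
per_author = changes_per_author_by_team_size(weekly_rows)
print(per_author)
```

In this made-up sample, total output rises with team size while output per author falls, which is the shape of the pattern described above.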

Shortcomings

One shortcoming of this methodology is that it assumes the number of code changes is a good proxy for “quantity shipped”. Those can be quite different depending on language, framework, and really even depending on the specific codebase.

Another shortcoming is that I only looked at merged PRs. I will likely write a follow-up to study rejected PRs.

I also didn’t take into account team maturity when studying teams, or any other qualitative aspects of team composition.

Lastly, context around languages and frameworks of the repos studied was missing. Files and lines changed are heavily influenced by these, so it would have been helpful to include them.

If any of these observations align with your experiences in software teams, or if you have any theories explaining the patterns we saw, I’d love to hear them! Feel free to email me -> daksh@greptile.com.