I am Daksh from Greptile. We're working on an AI code review bot that catches bugs and anti-patterns in pull requests, and we rely heavily on LLMs for this. Naturally, a key factor in our bot's success is how effectively the underlying model finds bugs when given all the necessary code and context.

Bug detection is a materially different problem from code generation. It is harder, and it is a different kind of problem rather than a harder version of the same one.

When reasoning models started to get good late last year with the release of OpenAI o3-mini, we became interested in the possibility that reasoning models would outperform traditional LLMs at finding bugs in code.

Reasoning models are functionally similar to traditional LLMs, except that they have a planning or thinking step before they begin generating their response. They spend some tokens pinning down the user's intent, then reason through the steps, and only then start generating the actual response.

The problem was that o3-mini isn't simply a reasoning/thinking version of another OpenAI LLM. For all we know, it's a larger model. As a result, there wasn't a clear comparison to be made.

Recently, Anthropic unveiled Claude Sonnet 3.7, the latest iteration of the Sonnet series, whose previous iteration (3.5) has been the preferred codegen model for months. Along with 3.7 they released 3.7 Thinking, the same model, but with reasoning capabilities. With this, we can do a fair evaluation.

The Evaluation Dataset

I wanted the dataset of bugs to cover multiple domains and languages. I picked sixteen domains, chose 2-3 self-contained programs for each domain, and used Cursor to generate each program in TypeScript, Ruby, Python, Go, and Rust.

Next I cycled through and introduced a tiny bug in each one. The type of bug I chose to introduce had to be:

- A bug that a professional developer could reasonably introduce

- A bug that could easily slip through linters, tests, and manual code review

Some examples of bugs I introduced:

- Undefined `response` variable in the ensure block

- Not accounting for amplitude normalization when computing wave stretching on a sound sample

- Hard-coded date that would be accurate in most, but not all, situations
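To make the first example concrete, here is a minimal Python analogue of that kind of bug. It is not one of the programs from the dataset (that one was in Ruby), and `fetch`, `download_buggy`, `download_fixed`, and `FakeConnectionError` are hypothetical names made up for illustration. The cleanup code refers to a variable that only exists if the call inside the `try` succeeded, so the happy path works and the bug only shows up when the request fails.

```python
class FakeConnectionError(Exception):
    """Stand-in for a network failure (hypothetical, for illustration only)."""


def fetch(url):
    # Hypothetical helper that always fails, simulating a dropped connection.
    raise FakeConnectionError(f"could not reach {url}")


def download_buggy(url):
    try:
        response = fetch(url)  # raises before `response` is ever bound
        return response
    finally:
        # Bug: if fetch() raised, `response` was never assigned, so this
        # cleanup line raises UnboundLocalError and hides the real error.
        response.close()


def download_fixed(url):
    response = None  # bind the name before entering the try block
    try:
        response = fetch(url)
        return response
    finally:
        # Only clean up if the request actually produced a response.
        if response is not None:
            response.close()
```

With the buggy version the caller sees an `UnboundLocalError` instead of the connection failure. With the fixed version the original `FakeConnectionError` propagates. Tests that only exercise successful requests would pass in both cases.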

At the end of this, I had 210 programs, each with a small, difficult-to-catch, and realistic bug.

A disclaimer: these bugs are the hardest-to-catch bugs I could think of, and are not representative of the median bugs usually found in everyday software.

Results

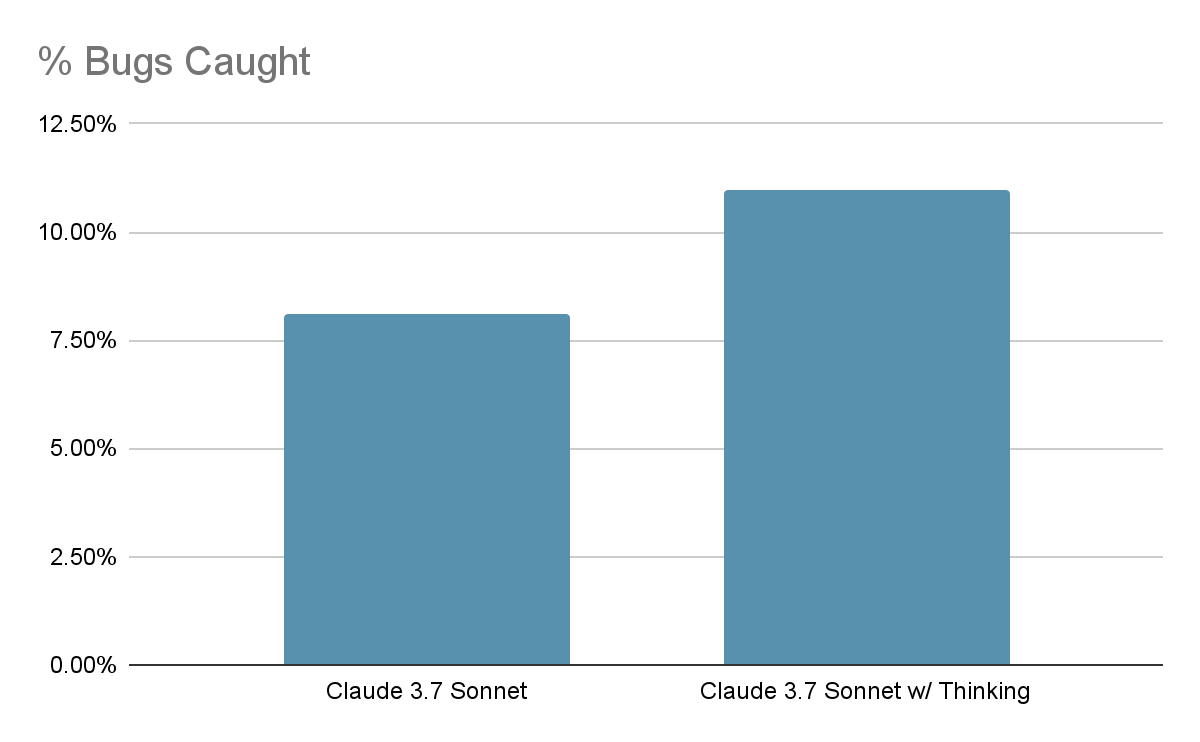

Of the 210 bugs, Claude 3.7 correctly discovered 17. The thinking version discovered 23.

Bug detection rates across all languages
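For readers who prefer rates to raw counts, the short sketch below converts the two totals above into percentages. The only inputs are the numbers reported in this post (210 bugs, 17 and 23 detections). The variable and function names are my own.

```python
TOTAL_BUGS = 210

# Detection counts reported in this post.
found = {
    "Sonnet 3.7": 17,
    "Sonnet 3.7 Thinking": 23,
}


def detection_rate(caught, total=TOTAL_BUGS):
    """Fraction of the seeded bugs that a model correctly identified."""
    return caught / total


for model, caught in found.items():
    print(f"{model}: {caught}/{TOTAL_BUGS} = {detection_rate(caught):.1%}")

# Relative improvement of the thinking model over the base model.
base = found["Sonnet 3.7"]
thinking = found["Sonnet 3.7 Thinking"]
relative_gain = (thinking - base) / base
print(f"Relative improvement: {relative_gain:.1%}")
```

That works out to roughly 8.1% for the base model and 11.0% for the thinking model: six additional bugs, or about a 35% relative improvement.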

The low numbers do not surprise me. These were difficult bugs. Part of why I am excited about the future of AI in software verification is that we're at the earliest stages of foundation model capabilities in this realm, and AI code reviewers are already useful. One can only imagine how useful they will be a couple of years from now.

Thinking is a bigger improvement for certain languages

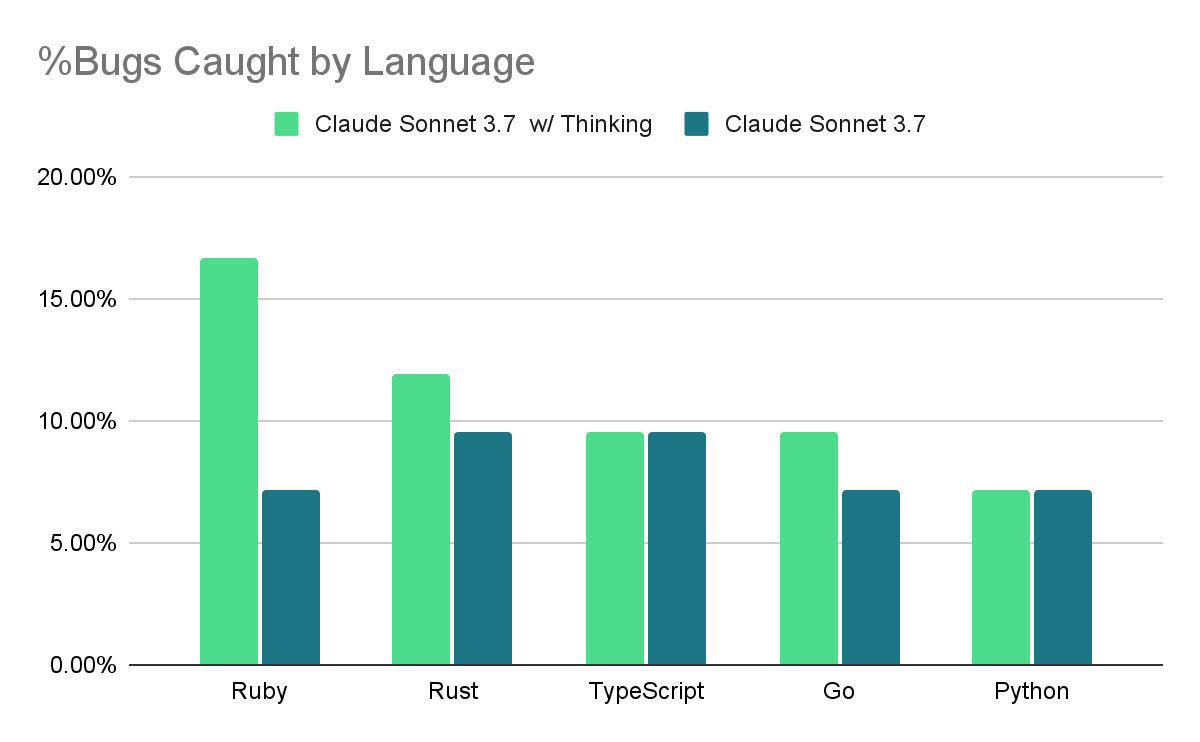

Something that surprised me was how much the results varied by language.

Bug detection rates broken down by programming language

The thinking model performed no better than the non-thinking model when catching bugs in TypeScript and Python, caught a few more in Go and Rust, and performed much better with Ruby.

One theory for why this happens: LLMs are trained on far more TypeScript and Python than the other languages, so even the non-thinking model can pick up on bugs by simple pattern matching. For less widely used languages like Ruby and Rust, the thinking model helped by reasoning through possible issues logically.

Interesting Bugs

- Gain Calculation in Audio Processing Library (Ruby)

The bug in this file was in the `TimeStretchProcessor` class of a Ruby audio processing library, specifically in how it calculates `normalize_gain`. Instead of adjusting the gain based on `stretch_factor` (which represents how much the audio is being sped up or slowed down), it uses a fixed formula. This means the output audio ends up with the wrong amplitude: either too loud or too quiet, depending on the stretch. The correct approach would be to scale the gain relative to the stretch to preserve consistent audio levels.

Sonnet 3.7 failed to detect this bug, but 3.7 Thinking caught it.
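The sketch below illustrates the general shape of this kind of bug in Python. It is not the code from the dataset and does not reproduce the library's actual formula. I am assuming a simple overlap-add time stretcher with a Hann window, where the spacing between output frames is the analysis hop multiplied by `stretch_factor`. The constants, the two `normalize_gain_*` functions, and `overlap_level` are all hypothetical. Under that assumption, the number of overlapping windows at any output sample changes with the stretch, so the gain has to change too.

```python
import math

# Hypothetical parameters, not taken from the original library.
WINDOW_SIZE = 1024
ANALYSIS_HOP = 256


def hann(n_samples):
    """Periodic Hann window as a list of floats."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / n_samples)
            for n in range(n_samples)]


def overlap_level(window, hop, n_frames=16):
    """Overlap-add copies of `window` every `hop` samples and return the
    summed level at the middle of the result, where overlap is steady."""
    out = [0.0] * (hop * (n_frames - 1) + len(window))
    for frame in range(n_frames):
        start = frame * hop
        for i, w in enumerate(window):
            out[start + i] += w
    return out[len(out) // 2]


def normalize_gain_fixed(window, stretch_factor):
    # Buggy shape: stretch_factor is accepted but never used, so the gain
    # is only right when the output hop equals the analysis hop.
    return ANALYSIS_HOP / sum(window)


def normalize_gain_scaled(window, stretch_factor):
    # Stretch-aware shape: the gain follows the actual output hop.
    synthesis_hop = int(ANALYSIS_HOP * stretch_factor)
    return synthesis_hop / sum(window)


window = hann(WINDOW_SIZE)
for stretch in (0.5, 1.0, 2.0):
    level = overlap_level(window, int(ANALYSIS_HOP * stretch))
    fixed = level * normalize_gain_fixed(window, stretch)
    scaled = level * normalize_gain_scaled(window, stretch)
    print(f"stretch={stretch}: fixed gain -> {fixed:.2f}, scaled gain -> {scaled:.2f}")
```

In this toy setup the stretch-aware gain keeps the output level at about 1.0 for every stretch, while the fixed gain gives about 2.0 at a stretch of 0.5 and about 0.5 at a stretch of 2.0. Nothing crashes and the audio still plays, which is what makes this class of bug easy to miss.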

- Race Condition in Smart Home Notification System (Go)

The most critical bug in this code was the lack of locking around device updates before broadcasting, which could lead to race conditions where clients receive stale or partially updated device state. It occurred in the `NotifyDeviceUpdate` method of `ApiServer`, which broadcasts device updates without synchronizing with device state modifications.

Here too, only the thinking model caught the issue.
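As a rough illustration of the fix, here is a Python sketch of a notifier that takes the same lock for updates and for the snapshot it broadcasts. The original program was written in Go and is not reproduced here. This `ApiServer` class, its methods, and the device fields are hypothetical stand-ins that borrow the names from the description above.

```python
import threading


class ApiServer:
    """Hypothetical Python analogue of the Go server described above."""

    def __init__(self):
        self._lock = threading.Lock()
        self._devices = {}      # device_id -> dict of state fields
        self._subscribers = []  # callables taking (device_id, snapshot)

    def subscribe(self, callback):
        with self._lock:
            self._subscribers.append(callback)

    def update_device(self, device_id, **fields):
        # All fields of one update are written under the lock, so a reader
        # holding the same lock never sees half of an update.
        with self._lock:
            state = self._devices.setdefault(device_id, {})
            for key, value in fields.items():
                state[key] = value

    def notify_device_update(self, device_id):
        # Copy the state under the same lock used by update_device. The
        # buggy shape would read self._devices here without the lock.
        with self._lock:
            snapshot = dict(self._devices.get(device_id, {}))
            subscribers = list(self._subscribers)
        # Call subscribers outside the lock so a slow client cannot block
        # device updates.
        for callback in subscribers:
            callback(device_id, snapshot)
```

The design choice worth noting is that the broadcast works from a copy taken under the lock, rather than holding the lock while calling out to clients. Each client receives a state that existed at one point in time, even if it is already slightly out of date when it arrives.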

It seems that Sonnet 3.7 Thinking is a better model for catching bugs in code than the base 3.7 model. There were no cases where Thinking missed a bug that base 3.7 caught. Oddly, I still gravitate towards 3.7 in Cursor for code generation, likely because it is faster and because reasoning is less useful for the type of codegen I do in Cursor. When it comes to detecting bugs, reasoning wins.